What GPT-5.1 and Gemini 3 Mean for Math Teaching

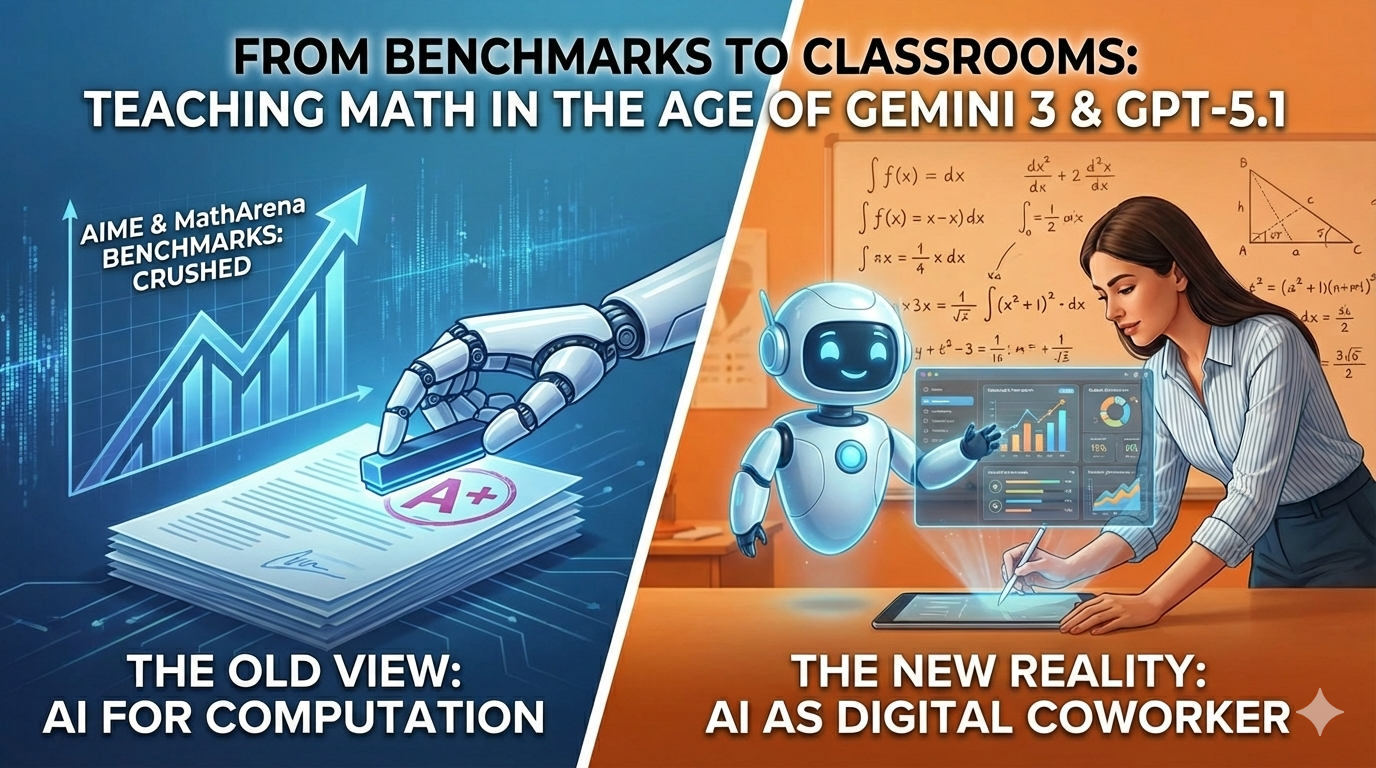

In math, competition-style benchmarks such as AIME and MathArena show that GPT-5.1 and Gemini 3 can reliably solve problems well beyond most secondary and early-college coursework, evidence of large gains in problem-solving power.

But, as I point out in my recent newsletter post, these tests measure problem-solving strength, not teaching ability: they say nothing about age-appropriate explanations, diagnosing misconceptions, or guiding productive struggle. The new models’ agentic and vision abilities (analyzing messy handwritten work, building concept maps, spotting error patterns) mean AI can now function as a powerful but fallible teaching assistant.

For educators, the core role is shifting toward that of a “teacher-manager”: deciding where in the learning process AI should appear, what work it should handle, and how to keep humans in the loop so that benchmark gains actually deepen student understanding.